Researchers at TU Berlin are developing a robot system that moves beyond the rigid, scripted sequences of traditional robot programs. Instead of following fixed step-by-step commands, the robot uses a model that represents the relationships in its world as a network, and it decides on its own what to do next.

In effect, the researchers teach the system how things relate to each other. This makes it possible to give the robot a goal such as “The drawer should be open.” The robot then works out for itself, continuously, how to reach that goal.

Unlike conventional approaches, which specify every motion precisely in advance, this robot is given only a target condition.

Everything in between (where it moves, how it grasps, and when it pulls) is not preprogrammed but decided anew at each step. This makes the system particularly well suited to real environments, which are full of uncertainty and constantly changing. That is precisely where rigid programs tend to reach their limits.

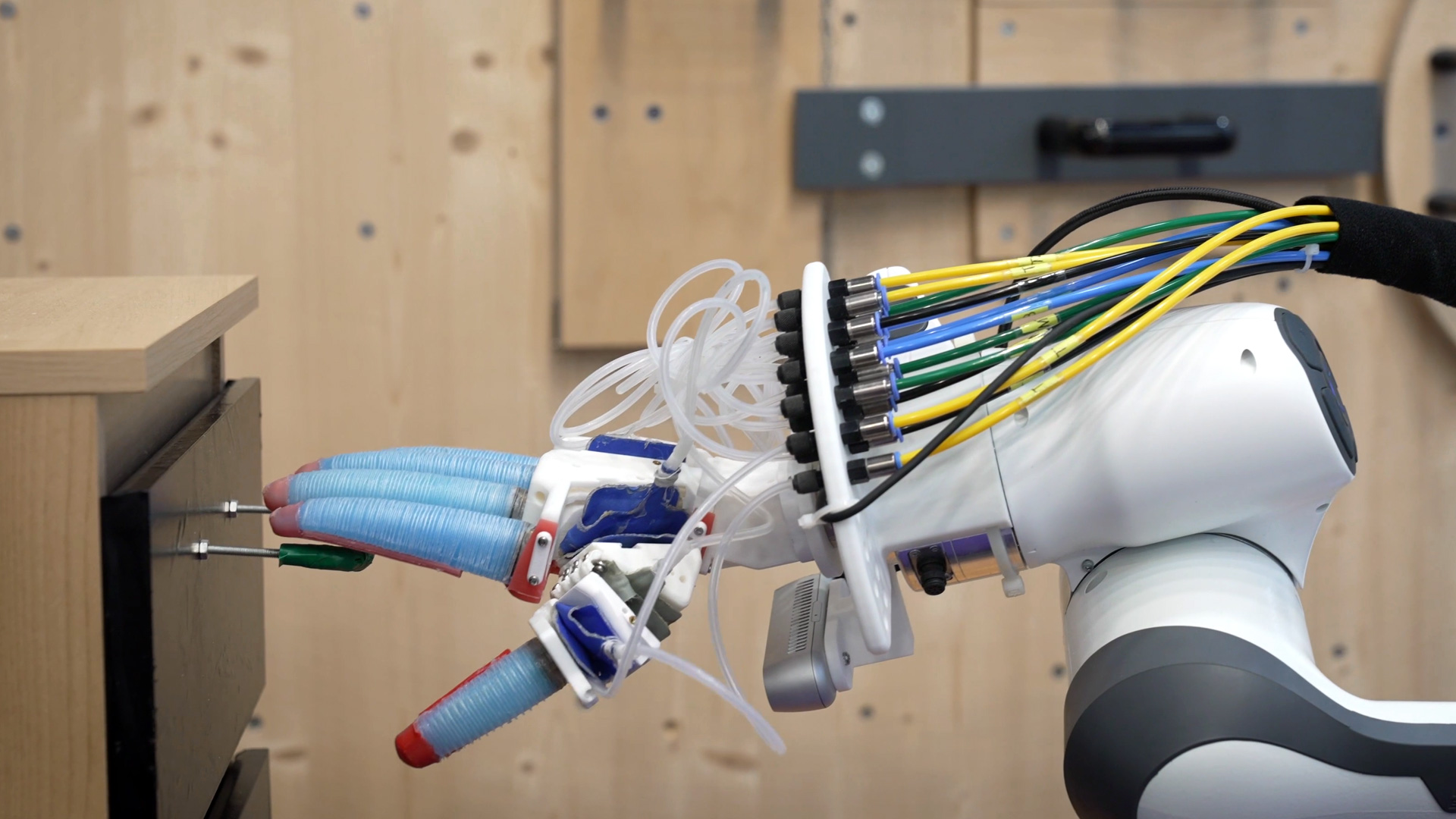

The researchers illustrate this with an example. First, the robot uses its cameras to search for a green drawer. It processes the image data, triangulates the drawer's position, and from that determines the grasp point in space.
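The article does not say how the TU Berlin system triangulates the handle. As a rough illustration of the geometry only, the sketch below assumes a calibrated, rectified stereo camera pair and the standard pinhole model: the depth of a point follows from how far it shifts between the left and right images (the disparity), and its sideways and vertical offsets follow from its pixel position. The class `StereoRig`, the function `triangulate`, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibrated, rectified stereo pair (pinhole model)."""
    focal_px: float    # focal length in pixels, shared by both cameras
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point, x (pixels)
    cy: float          # principal point, y (pixels)


def triangulate(rig, u_left, v_left, u_right):
    """Return (X, Y, Z) in metres in the left-camera frame for a point seen at
    column u_left / row v_left in the left image and at column u_right in the
    right image (rectification puts it on the same row in both)."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = rig.focal_px * rig.baseline_m / disparity  # depth from disparity
    x = (u_left - rig.cx) * z / rig.focal_px       # back-project column to metres
    y = (v_left - rig.cy) * z / rig.focal_px       # back-project row to metres
    return x, y, z


# Example: the centre of the green handle, detected in both images.
rig = StereoRig(focal_px=600.0, baseline_m=0.10, cx=320.0, cy=240.0)
grasp_point = triangulate(rig, u_left=380.0, v_left=270.0, u_right=320.0)
print(grasp_point)  # roughly (0.1, 0.05, 1.0): about 1 m in front of the camera
```

A real system would also have to detect the handle reliably in both images and convert the point from the camera frame into the robot's own coordinate frame; both steps are left out here.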

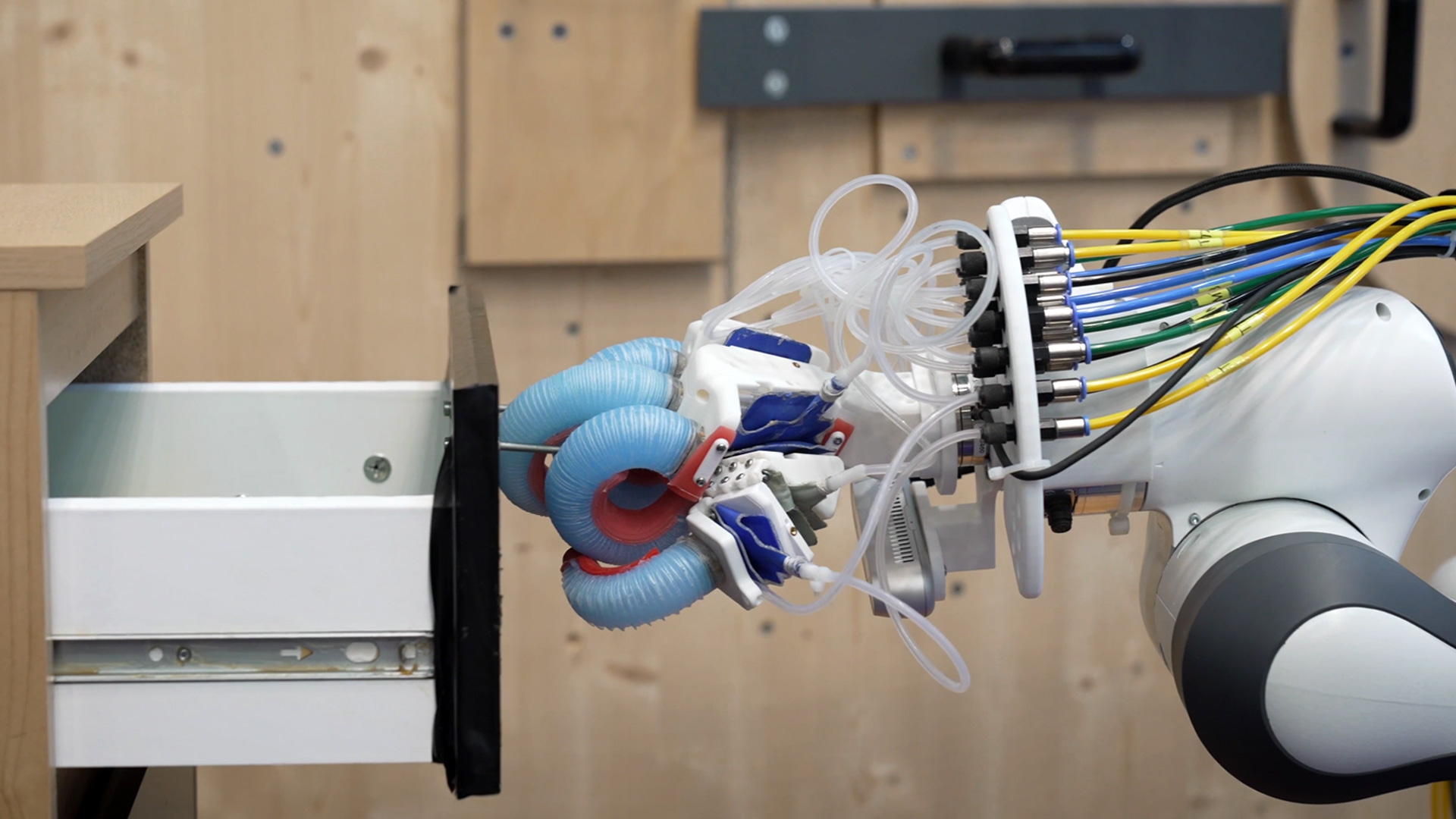

Once it is securely in position, it grasps the handle and pulls the drawer open. But that's not all: if the drawer slips out of its gripper or a person unexpectedly pushes it shut, the system immediately recognizes that its picture of the world is no longer accurate.

It then starts over: it locates the handle again and reopens the drawer. This reaction is not hard-coded; it emerges from the internal rules combined with whatever state the robot currently perceives.
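The controller itself is not published. The toy loop below only illustrates the principle described above: the next action is chosen from the currently perceived state rather than read off a fixed script, so a disturbance does not need special handling. It simply produces a different state, and therefore a different choice. The state names, actions, `choose_action`, `simulate`, and the simulated push are all hypothetical.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical perceived facts for the drawer task.
INITIAL = {"handle_located": False, "handle_grasped": False, "drawer_open": False}


def choose_action(state: dict[str, bool]) -> Optional[str]:
    """Choose the next action from the currently perceived state alone."""
    if state["drawer_open"]:
        return None  # goal condition holds: nothing to do
    if not state["handle_located"]:
        return "locate_handle"
    if not state["handle_grasped"]:
        return "grasp_handle"
    return "pull_drawer"


def simulate(action: str, state: dict[str, bool]) -> dict[str, bool]:
    """Toy world model: every action succeeds and sets the fact it targets."""
    effects = {
        "locate_handle": "handle_located",
        "grasp_handle": "handle_grasped",
        "pull_drawer": "drawer_open",
    }
    new_state = dict(state)
    new_state[effects[action]] = True
    return new_state


def run(disturb_at: Optional[int] = None, max_steps: int = 20) -> list[str]:
    """Run the perceive-decide-act loop and return the actions taken."""
    state = dict(INITIAL)
    actions = []
    for step in range(max_steps):
        if step == disturb_at:
            # Someone pushes the drawer shut: the grasp is lost and the
            # old handle estimate can no longer be trusted.
            state = dict(INITIAL)
        action = choose_action(state)
        if action is None:
            continue  # goal holds; keep monitoring on the next step
        actions.append(action)
        state = simulate(action, state)
    return actions


print(run())              # undisturbed run
print(run(disturb_at=3))  # drawer pushed shut after it was opened
```

Undisturbed, the loop issues `locate_handle`, `grasp_handle`, `pull_drawer` and then keeps watching. With `disturb_at=3` the same three actions repeat once: the reset state leads `choose_action` back to locating the handle without any dedicated recovery code.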

This approach differs significantly from many learning-based machine vision methods, which need to see millions of images of drawers and grasping motions before they learn what to do. The Berlin solution does not require this.

Instead, it uses graphs that encode dependencies, for example the fact that a drawer can only be opened after it has been grasped. This logic is enough for the robot to respond flexibly to new situations, even ones it has never encountered in exactly that form.
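To make the idea of a dependency graph more concrete, here is a minimal sketch, not the team's actual representation. Each condition maps to the action that achieves it and the conditions that must hold first; working backwards from the goal to the first unmet prerequisite yields the next action. The graph contents, including the extra `gripper_empty` condition, and the functions `next_step` and `plan` are hypothetical, and the graph is assumed to be acyclic.

```python
from __future__ import annotations

# Hypothetical dependency graph for the drawer task:
# condition -> (action that achieves it, conditions that must hold first).
GRAPH = {
    "drawer_open": ("pull_drawer", ["handle_grasped"]),
    "handle_grasped": ("grasp_handle", ["handle_located", "gripper_empty"]),
    "handle_located": ("locate_handle", []),
    "gripper_empty": ("release_object", []),
}


def next_step(goal: str, state: set[str], graph=GRAPH) -> tuple[str, str] | None:
    """Return (condition, action) for the next thing to do toward `goal`,
    or None if the goal already holds."""
    if goal in state:
        return None
    action, prereqs = graph[goal]
    for cond in prereqs:
        if cond not in state:
            return next_step(cond, state, graph)  # achieve this prerequisite first
    return goal, action  # all prerequisites hold: act on the goal itself


def plan(goal: str, state: set[str], graph=GRAPH) -> list[str]:
    """Unroll next_step into a full action sequence, optimistically
    assuming every action succeeds."""
    state = set(state)
    actions = []
    while (step := next_step(goal, state, graph)) is not None:
        condition, action = step
        actions.append(action)
        state.add(condition)
    return actions


print(plan("drawer_open", {"gripper_empty"}))
print(plan("drawer_open", set()))  # gripper starts out holding something
```

Nothing in the graph says "release the object first" for the second case; that step falls out of the dependencies. In a closed loop, the robot would call `next_step` again on freshly perceived state after every action instead of trusting the optimistic `plan`.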

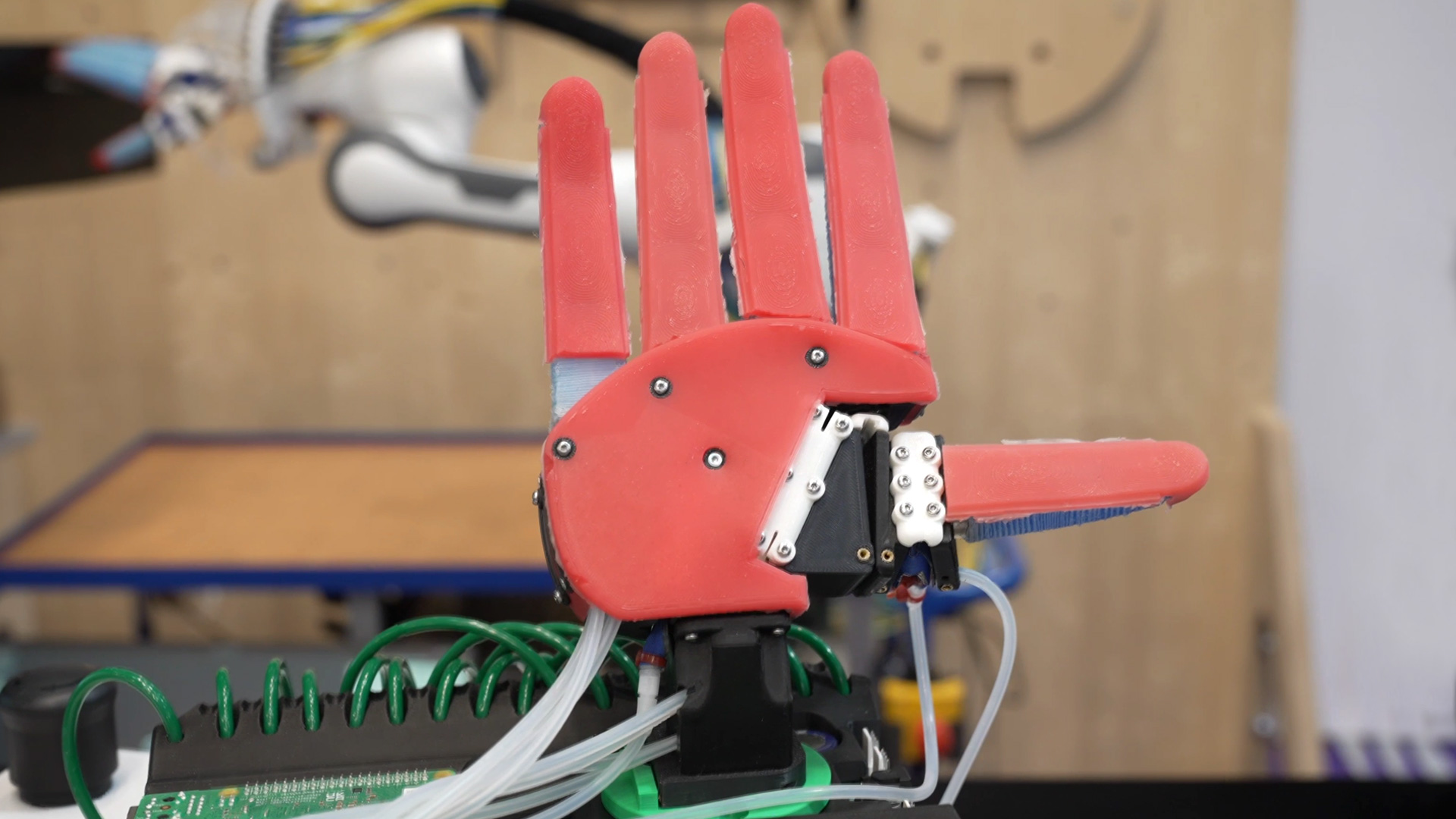

So far, the researchers have mainly demonstrated simple scenes: the robot has to open a drawer, and it manages to do so even when someone makes the job harder. But the approach is designed for more.

Longer, more complex tasks are conceivable, such as first identifying an object and then placing it in the open drawer. The team is still experimenting with ways to make such long-horizon planning robust.

Another promising idea is that, in the future, robots could learn their dependency graphs themselves through “learning from demonstration.” Rather than only following predefined rules, they would build their models from observation and could therefore adapt even more flexibly to new requirements.
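The article does not say how the team would learn these graphs. One deliberately simple way to picture the idea, swapped in here purely for illustration, is to record which facts held just before each demonstrated action and keep, for every action, only the facts that held in all demonstrations. Those become its candidate preconditions. The function `learn_preconditions`, the fact names, and the demonstrations are hypothetical.

```python
from __future__ import annotations


def learn_preconditions(demos: list[list[tuple[set[str], str]]]) -> dict[str, set[str]]:
    """For each action, keep only the facts that were true immediately before
    it in every demonstration (set intersection over observations)."""
    preconditions: dict[str, set[str]] = {}
    for demo in demos:
        for state_before, action in demo:
            if action in preconditions:
                preconditions[action] &= state_before  # drop facts that varied
            else:
                preconditions[action] = set(state_before)  # first observation
    return preconditions


# Two hypothetical demonstrations of opening the drawer. Each step records
# the facts observed just before the demonstrated action.
demo_a = [
    ({"gripper_empty"}, "locate_handle"),
    ({"gripper_empty", "handle_located"}, "grasp_handle"),
    ({"handle_located", "handle_grasped"}, "pull_drawer"),
]
demo_b = [
    ({"gripper_empty", "lights_on"}, "locate_handle"),
    ({"gripper_empty", "handle_located", "lights_on"}, "grasp_handle"),
    ({"handle_located", "handle_grasped", "lights_on"}, "pull_drawer"),
]

learned = learn_preconditions([demo_a, demo_b])
print(learned["pull_drawer"])  # lights_on is dropped: it did not hold in demo_a
```

The heuristic can only discard facts that actually vary between demonstrations: anything that happens to be true in every example is kept as a spurious precondition. More varied demonstrations therefore give sharper dependency graphs.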

The ultimate goal is a robot that can perform a wide variety of tasks without being reprogrammed each time: one that stays flexible whether it is opening drawers, tidying up, or doing other work, and that can also cope with unforeseen disruptions.

In the future, this could make robots significantly more robust and versatile, whether in manufacturing, in care, or simply at home.